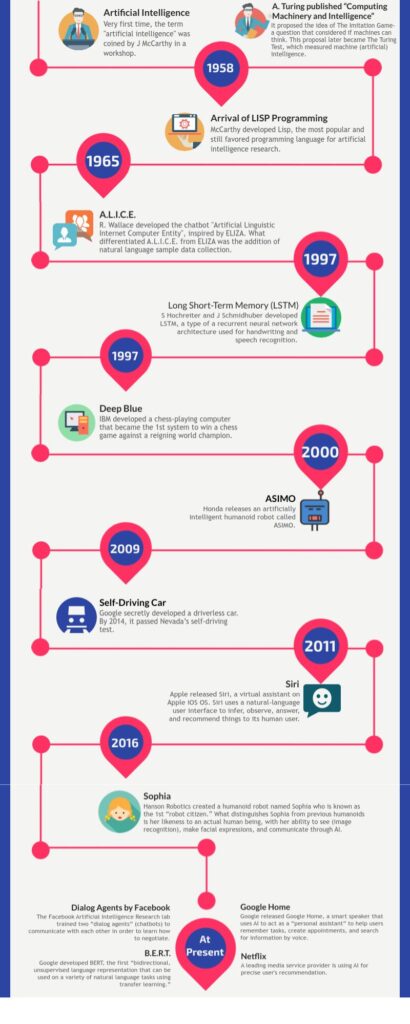

The term “artificial intelligence” was coined by John McCarthy for the 1956 Dartmouth Conference, whose aim was to describe intelligence precisely enough that a machine could be built to simulate it.

In the 1960s and 1970s, AI research focused on the development of expert systems designed to mimic the decisions made by human specialists in specific fields. These systems were applied in sectors such as engineering, finance and medicine.

In the 1980s, research programs took a growing interest in machine learning. Programs from the 1960s had already solved algebra problems formulated in a verbal, non-numerical way; this was the first use of language by AIs, and it eventually led to the ChatGPT we know today. Neural networks, modeled on the structure and functioning of the human brain, also attracted renewed interest during this decade.

The 1990s saw a shift in focus towards machine learning and data-driven approaches, thanks to the increased availability of digital data and progress in computing power. This period saw the rise of neural networks and the development of support vector machines, which enabled AI systems to learn from data, improving their performance and adaptability. Realistic simulation of natural language had begun much earlier, notably with the STUDENT program (1964) and ELIZA (1966), often described as the very first chatbot.

Things have really accelerated over the last 20 years. Beginning in the 2000s, progress in speech recognition, image recognition and natural language processing was made possible by the advent of deep learning, a branch of machine learning that uses deep neural networks.

Finally, in the 2010s, AI became a genuinely visible part of our daily lives through smartphones, virtual assistants and the chatbots found on many commercial sites, culminating in the GPT revolution.