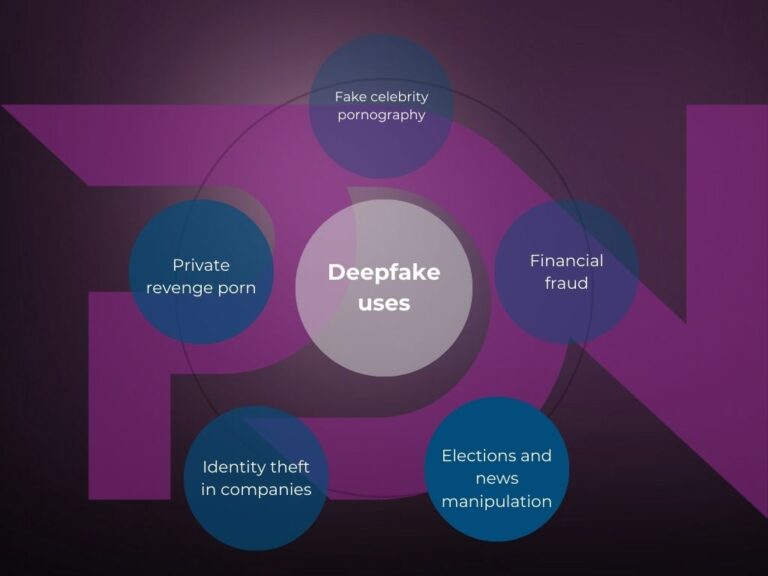

Manipulation, disinformation, defamation and humiliation are at the forefront, and the targets are mostly public figures, above all female celebrities. Now that the technology has become much more accessible, private revenge porn is also one of its most frequent uses.

In a study carried out in September 2019, the artificial intelligence company Deeptrace found 15,000 deepfake videos online, twice as many as nine months earlier. Over 96% of this content was pornographic, and 99% of those videos mapped the faces of female celebrities onto porn performers. Inadequate moderation on many social networks, particularly Twitter / X, has been repeatedly highlighted. Politics is also a prime target: fake images compromising high-profile personalities are generated, and fake phone calls imitating Joe Biden's voice were recently made with the aim of discouraging voters from casting their ballots in the forthcoming presidential election. False information about ongoing armed conflicts is created in the same way. Entire disinformation campaigns can thus be produced in just a few clicks, at low cost, by virtually anyone, even with limited technical skills.

This technique is now also creating major security problems for companies. Recently, several employees of major companies have been tricked, through phone calls reproducing the voices of executives and even a fake meeting on Zoom, into transferring tens of millions of dollars on the fictitious orders of superiors who are authorized to request such operations. A VMware report revealed a 13% increase in deepfake attacks last year, with 66% of cybersecurity professionals saying they had witnessed them in the past year. Most of these attacks are carried out by e-mail (78%), which is linked to the rise in Business Email Compromise (BEC) attacks. BEC is a method in which attackers gain access to a corporate e-mail account and impersonate its owner in order to defraud the company, its users or its partners. According to the FBI, BEC attacks cost businesses $43.3 billion in just five years, from 2016 to 2021. Third-party meeting platforms (31%) and business collaboration software (27%) are increasingly used for BEC. The IT industry is the main target of deepfake attacks (47%), followed by finance (22%) and telecommunications (13%). It is therefore essential for companies to remain vigilant and to implement deepfake detection technology and robust security measures to protect themselves against this type of attack.

Identity theft through deepfakes has thus become a serious concern for corporate security. What's more, as the technology rapidly evolves, these fake images, voices and videos are becoming increasingly difficult to identify. The problem has now moved well beyond the private sphere and can no longer be ignored by governments or businesses.